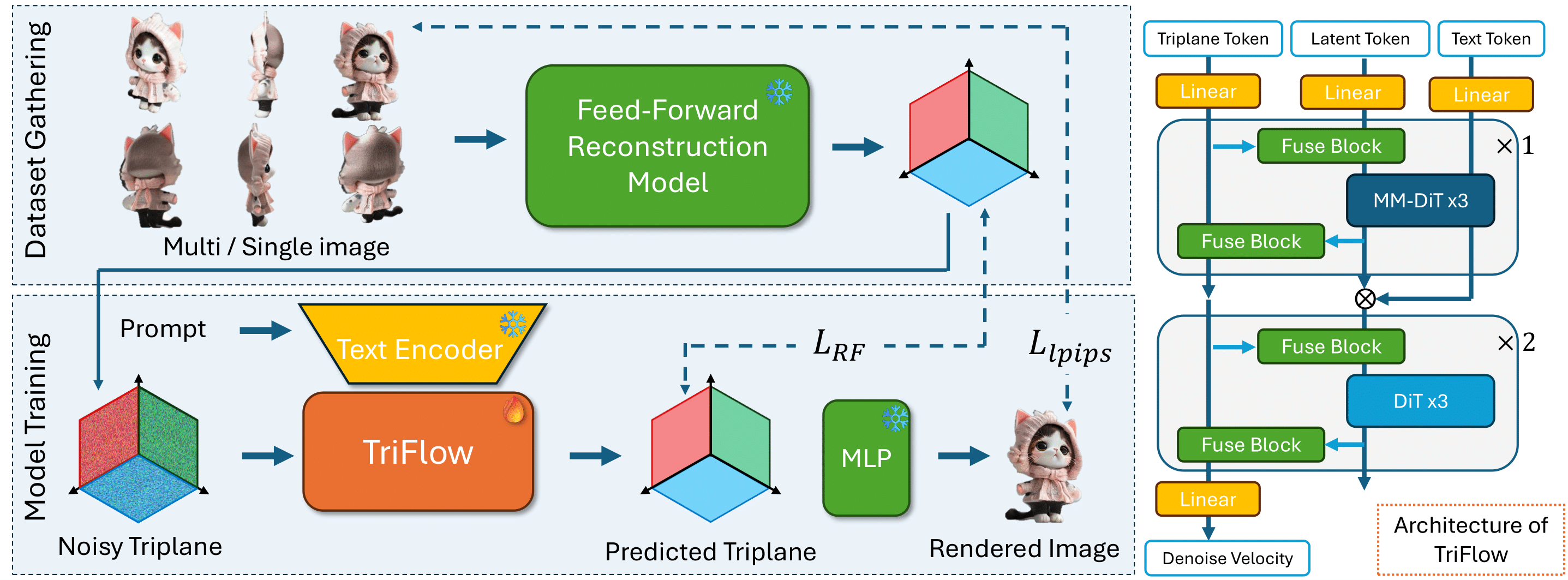

Recent AI-based 3D content creation has largely evolved along two paths: feed-forward image-to-3D reconstruction approaches and 3D generative models trained with 2D or 3D supervision. In this work, we show that existing feed-forward reconstruction methods can serve as effective latent encoders for training 3D generative models, thereby bridging these two paradigms. By reusing powerful pre-trained reconstruction models, we avoid computationally expensive encoder network training and obtain rich 3D latent features for generative modeling at no additional training cost. However, the latent spaces of reconstruction models are not well-suited for generative modeling due to their unstructured nature. To enable flow-based model training on these latent features, we develop post-processing pipelines, including protocols to standardize the features and spatial weighting to concentrate on important regions. We further incorporate a 2D image-space perceptual rendering loss to handle the high-dimensional latent spaces. Finally, we propose a multi-stream transformer-based rectified flow architecture to achieve linear scaling and high-quality text-conditioned 3D generation. Our framework leverages the advancements of feed-forward reconstruction models to enhance the scalability of 3D generative modeling, achieving both high computational efficiency and state-of-the-art performance in text-to-3D generation.
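
As a rough illustration of how the pieces named above could fit together, the following NumPy sketch standardizes a batch of latent features, builds a rectified-flow training pair by interpolating linearly between Gaussian noise and the latents, and scores a velocity prediction with a spatially weighted squared error. It is not the authors' implementation: the per-channel standardization, the `(batch, channels, height, width)` latent layout, the weight map, and all function names are assumptions made for this example, and the perceptual rendering loss and transformer architecture are omitted.

```python
import numpy as np


def standardize(latents, eps=1e-6):
    """Per-channel standardization over batch and spatial axes (assumed protocol)."""
    mean = latents.mean(axis=(0, 2, 3), keepdims=True)
    std = latents.std(axis=(0, 2, 3), keepdims=True)
    return (latents - mean) / (std + eps), mean, std


def rectified_flow_pair(x1, rng):
    """Sample noise x0 and time t, then return (x_t, t, target velocity).

    Uses the straight-line path x_t = (1 - t) * x0 + t * x1, whose
    velocity along the path is the constant x1 - x0.
    """
    x0 = rng.standard_normal(x1.shape)
    t = rng.uniform(size=(x1.shape[0], 1, 1, 1))  # one time value per sample
    xt = (1.0 - t) * x0 + t * x1
    target = x1 - x0
    return xt, t, target


def weighted_flow_loss(pred, target, weights):
    """Squared velocity error, averaged over channels and weighted per location.

    `weights` is a hypothetical (height, width) importance map; it is
    normalized to sum to 1 so uniform weights recover the plain MSE.
    """
    w = weights / weights.sum()
    per_position = ((pred - target) ** 2).mean(axis=1)  # shape (B, H, W)
    return float((per_position * w).sum(axis=(1, 2)).mean())


rng = np.random.default_rng(0)
raw_latents = rng.standard_normal((4, 8, 16, 16)) * 3.0 + 2.0  # stand-in for encoder output
z, mean, std = standardize(raw_latents)
xt, t, target = rectified_flow_pair(z, rng)

# Emphasize the central region of the latent grid (illustrative choice only).
weights = np.ones((16, 16))
weights[4:12, 4:12] = 4.0

# A trained model would predict the velocity from (xt, t, text condition);
# a zero prediction stands in for it here.
loss = weighted_flow_loss(np.zeros_like(target), target, weights)
```

In an actual training loop the zero prediction would be replaced by the output of the flow model, and the stored `mean` and `std` would be used to map generated latents back to the reconstruction model's feature space before decoding.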

@article{wizadwongsa2024triflow,
  author  = {Wizadwongsa, Suttisak and Zhou, Jinfan and Li, Edward and Park, Jeong Joon},
  title   = {Taming Feed-forward Reconstruction Models as Latent Encoders for 3D Generative Models},
  journal = {arxiv},
  year    = {2024},
}